Bringing the Big Picture into Focus: The Future of Remote Sensing at NOAA

Remote sensing is the science of obtaining information about objects or areas from a distance, typically from aircraft or spacecraft. NOAA scientists collect and use remotely sensed data for a range of activities, from mapping coastlines, to supporting military and disaster response personnel, to monitoring hurricane activity.

In the aftermath of Hurricane Katrina, Gulf Coast families were able to determine whether their homes were still standing by viewing NOAA aerial photographs online. Researchers along the coast of Florida use NOAA satellite imagery to monitor chlorophyll concentrations in the Gulf of Mexico and detect the onset of harmful algal blooms. Every day, pilots navigating into airports in reduced-visibility weather conditions rely on runway approach procedures developed using remotely sensed data acquired by NOAA. Following the 9/11 terrorist attacks on the World Trade Center, emergency response personnel used airborne lidar data to create three-dimensional models of building structures and the surrounding area, helping them locate original support structures, stairwells, and other features. These are just a few examples of activities made possible by remote sensing.

As a leader in the rapidly evolving field of remote sensing, NOAA continues to seek and refine ways to deliver remote sensing data and products to all users. This story takes a brief look at remote sensing today and considers the challenges and opportunities in remote sensing that NOAA will likely face over the next 20 years.

Remote Sensing Today: An Overview

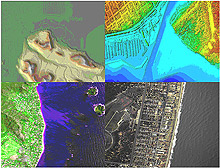

Examples of different types of remotely sensed data. Clockwise from upper left: Interferometric Synthetic Aperture Radar (IFSAR) data, topographic and bathymetric lidar data, digital photography, and hyperspectral imagery, all of coastal regions.

Remote sensing is the science of obtaining information about objects or areas from a distance, typically (although not always) from aircraft or spacecraft. Airborne and spaceborne sensors can be classified as either "active" or "passive," depending on whether they use their own source of electromagnetic energy (active) or rely on naturally occurring energy such as reflected solar radiation (passive). Examples of active remote sensing technologies include radar and airborne light detection and ranging (lidar). Passive remote sensing technologies include aerial photography and satellite imagery.

NOAA remote sensing specialists work to improve the accuracy and speed of delivery of remotely sensed data. Scientists spend much of their time analyzing the technical details of airborne and spaceborne sensors. They also develop methods to process collected data. These "behind the scenes" activities are crucial to delivering information to professionals who use the data to conduct their own work.

Digital aerial photography showing devastation in Ocean Springs, Mississippi. This imagery was acquired by NOAA on August 30, 2005, one day after Hurricane Katrina slammed the region.

Today, remote sensing science at NOAA supports a remarkably large number of other disciplines and activities. Geographers, cartographers, foresters, geologists, oceanographers, meteorologists, ecologists, coastal managers, urban planners, military and disaster response personnel, and professionals in a wide variety of other areas all rely on remotely sensed data.

Scientists around NOAA also use remotely sensed data for a range of applications. For example, within the National Ocean Service, important applications of remote sensing include coastal mapping, shoreline change analysis, airport surveying, disaster response, benthic habitat mapping, analysis of harmful algal blooms, monitoring of coastal ecosystems, and nautical charting.

The Future of Remote Sensing: Challenges & Opportunities

The future of remote sensing lies in making numerous types of accurate, current, and high-resolution remotely sensed data and derived geospatial information products readily available for every area of interest. Professionals look forward to the day when data can be easily and quickly imported and analyzed in non-specialized software packages.

To help make this vision a reality, NOAA remote sensing experts are faced with the following challenges over the next two decades:

- improving the accuracy, resolution, timeliness, and ease-of-use of remotely sensed data;

- enhancing "rapid response" capabilities by providing high-resolution data of affected areas immediately following natural and human-induced disasters;

- improving the delivery of remotely sensed data via the Internet, so that users can more easily find and retrieve the exact data and information they need;

- developing ways to fuse data from multiple sensors, to allow multiple projects and programs to improve the quantity and quality of information available to the public;

- maintaining communications with the many users of NOAA remotely sensed data, to support a wide variety of specific applications;

- using new remote sensing technologies to collect data in a more efficient and cost-effective manner so that accurate, current data are available for larger geographic areas; and

- improving software so that remotely sensed data can be quickly and easily processed and analyzed to support NOAA programs and simultaneously yield information needed by professionals.

NOAA will meet the challenges above through continued research and development in emerging remote sensing technologies, including platforms, sensors, and software. By successfully transitioning from research to operations, NOAA anticipates making rapid progress in tackling these challenges. The text that follows explores some of the most promising, up-and-coming remote sensing technologies that NOAA will continue to explore in the future.

Unmanned Aircraft Systems

From April to November 2005, NOAA and NASA conducted the Altair Integrated System Flight Demonstration Project in cooperation with General Atomics Aeronautical Systems, Inc.

Unmanned aircraft systems (UASs) are remotely piloted or self-piloted aircraft that can carry cameras, sensors, or communications equipment. UASs have been used by the military to gather intelligence since the 1950s, but have only recently been used to collect high-resolution spatial data. UASs offer more flexibility than fixed-orbit satellites, and because they are operated remotely, they may prove to be extremely beneficial in disaster response applications, when it is too dangerous to send in a piloted aircraft.

NOAA and the National Aeronautics and Space Administration (NASA) are already partnering to explore UAS technology, which shows great promise for helping to fill data gaps in remote or dangerous areas.

Digital Aerial Cameras

Digital cameras are replacing film-based cameras, changing the way we take pictures in our everyday lives. In the world of aerial photography, there are many more technical challenges involved in designing mapping-quality digital aerial cameras than in everyday hand-held digital cameras. Hence, the transition from film to digital is taking longer in the airborne mapping world. On the positive side, digital aerial mapping cameras offer even more potential advantages than everyday hand-held digital cameras. Disaster response activities at NOAA and elsewhere are already benefiting from the recent emergence of directly georeferenced, high-resolution digital aerial cameras.

Digital aerial cameras make aerial photographs available more quickly. Because images captured by these cameras are already in electronic form, they can quickly be processed and made available to users. The greatest time savings with these cameras comes from their direct georeferencing capability, which allows camera position and orientation to be determined automatically using global positioning system (GPS) and inertial measurement unit (IMU) technology. Using sophisticated software, analysts can quickly generate imagery in which objects are shown in their correct map coordinates. Digital aerial cameras also provide imagery that is more sensitive to shadows and low illumination levels than film photography, and they typically acquire data in both visible and near-infrared bands. The improved radiometric performance of digital cameras enables significantly better image classification accuracy.
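To illustrate the idea behind direct georeferencing, the short Python sketch below projects a single image point onto flat ground using a camera position (as GPS would supply) and a camera orientation (as an IMU would supply). It is a simplified illustration under stated assumptions, not NOAA's processing software: the function names, the roll-pitch-yaw rotation order, the flat-terrain assumption, and all numeric values are hypothetical, and operational systems also account for lens distortion, sensor mounting offsets, and real terrain.

```python
import math


def rotation_matrix(roll, pitch, yaw):
    """Camera-to-map rotation, assumed here to be R = Rz(yaw) * Ry(pitch) * Rx(roll).

    Angles are in radians. The rotation order is an illustrative assumption.
    """
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def image_to_ground(camera_xyz, attitude_rad, focal_mm, image_xy_mm, ground_z):
    """Intersect the ray through an image point with a flat ground plane.

    camera_xyz   -- camera position in map coordinates (from GPS), meters
    attitude_rad -- (roll, pitch, yaw) of the camera (from the IMU), radians
    focal_mm     -- focal length, millimeters
    image_xy_mm  -- image point measured from the image center, millimeters
    ground_z     -- assumed flat terrain elevation, meters
    """
    x0, y0, z0 = camera_xyz
    r = rotation_matrix(*attitude_rad)

    # Ray from the perspective center through the image point, camera frame.
    ray_cam = (image_xy_mm[0], image_xy_mm[1], -focal_mm)

    # Rotate the ray into the map frame.
    ray_map = [sum(r[i][j] * ray_cam[j] for j in range(3)) for i in range(3)]
    if ray_map[2] >= 0:
        raise ValueError("ray does not point toward the ground")

    # Scale the ray so that it reaches the ground plane.
    scale = (ground_z - z0) / ray_map[2]
    return (x0 + scale * ray_map[0], y0 + scale * ray_map[1], ground_z)


# Level camera 1,500 m above flat ground at elevation 0 m.
ground = image_to_ground(
    camera_xyz=(1000.0, 2000.0, 1500.0),
    attitude_rad=(0.0, 0.0, 0.0),
    focal_mm=100.0,
    image_xy_mm=(10.0, -5.0),
    ground_z=0.0,
)
print(ground)  # (1150.0, 1925.0, 0.0)
```

Because position and orientation are measured at the moment of exposure, no ground control points are needed in this calculation, which is where the time savings described above come from.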

Airborne Lidar

Airborne lidar is an active remote sensing technology that has generated excitement in recent years because it can efficiently produce very accurate, high-resolution data sets depicting an area's topography.

Lidar systems calculate ranges from the time it takes for laser pulses to travel to and from terrain or elevated features (such as a tree canopy) on the Earth's surface. A scanning mechanism (typically a cross-track scanning mirror) is used to deflect the laser beam and create a swath on the ground. By combining laser ranges and scanner angle data with GPS and IMU data, NOAA can rapidly generate data sets that represent the elevations within an area with decimeter-level vertical accuracy. Bathymetric lidar systems can measure water depths, in addition to terrain elevations, thus increasing the utility of this technology for a range of applications.
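The range calculation described above can be sketched in a few lines of Python. This is a minimal illustration under simplifying assumptions, not an operational lidar processing chain: it uses the vacuum speed of light, assumes a perfectly level aircraft (so the IMU attitude terms drop out), and measures the scan angle from nadir in the cross-track direction. The function names and sample values are hypothetical.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # vacuum value; air is slightly slower


def range_from_travel_time(round_trip_s):
    """One-way range in meters. The pulse travels out and back, so divide by two."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def ground_point(sensor_xyz, range_m, scan_angle_deg):
    """Locate a laser return for a level aircraft whose cross-track axis is x.

    sensor_xyz     -- sensor position from GPS, meters
    range_m        -- one-way laser range, meters
    scan_angle_deg -- mirror angle measured from nadir, degrees
    """
    x, y, z = sensor_xyz
    theta = math.radians(scan_angle_deg)
    return (
        x + range_m * math.sin(theta),  # cross-track offset
        y,                              # no along-track offset in this sketch
        z - range_m * math.cos(theta),  # elevation of the reflecting surface
    )


# A pulse that returns after about 6.67 microseconds has traveled roughly 1 km each way.
measured_range = range_from_travel_time(6.671e-6)
point = ground_point((0.0, 0.0, 1000.0), measured_range, 10.0)
print(round(measured_range, 1), point)
```

In a real system the IMU roll, pitch, and heading would rotate the laser vector before it is added to the GPS position, and timing precision matters a great deal: an error of one nanosecond in the round-trip time corresponds to about 15 centimeters of range.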

Lidar is already being used at NOAA for shoreline mapping, nautical charting, post-storm analysis, and airport obstruction surveying, and new applications of this technology are emerging almost daily.

Synthetic Aperture Radar

Yet another active remote sensing technology that holds promise is Synthetic Aperture Radar (SAR). Unlike lidar systems, which typically use near-infrared and/or visible radiation, SAR systems operate at much longer wavelengths in the microwave region of the electromagnetic spectrum. One advantage of using microwave wavelengths is that data can be collected in almost all weather conditions. Also, as active sensors, SAR systems can be operated day and night. This technology is very attractive for efficient data collection, especially in areas such as coastal Alaska, where cloud cover is persistent throughout much of the year.
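To give a sense of scale for "much longer wavelengths," the Python sketch below compares one near-infrared lidar wavelength with one microwave radar wavelength, using the relation wavelength = speed of light / frequency. The specific values (a 1064-nanometer laser and a 9.6-gigahertz X-band radar) are illustrative assumptions chosen as typical examples; they are not taken from any particular NOAA system.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def wavelength_m(frequency_hz):
    """Wavelength in meters for a given frequency in hertz."""
    return SPEED_OF_LIGHT_M_PER_S / frequency_hz


# Illustrative values only (assumptions, not NOAA system specifications).
lidar_wavelength_m = 1064e-9         # a common near-infrared laser wavelength
sar_wavelength_m = wavelength_m(9.6e9)  # an X-band radar frequency

ratio = sar_wavelength_m / lidar_wavelength_m
print(f"SAR wavelength: {sar_wavelength_m * 100:.2f} cm")
print(f"Roughly {ratio:,.0f} times longer than the lidar wavelength")
```

A wavelength of a few centimeters is far larger than the water droplets in clouds, which is why microwave energy passes through cloud cover that would block visible and near-infrared light.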

Multisensor Data Fusion

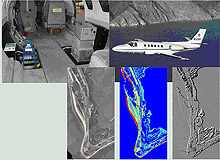

Images from the NGS sensor fusion project.

Perhaps the most exciting area of remote sensing research at NOAA is multisensor data fusion. The merging, or fusion, of remotely sensed data collected simultaneously by active and passive sensors promises to yield more and better information about both the location and characteristics of materials and features on the Earth's surface. Projects completed to date have only begun to scratch the surface of the potential in this area.

Conclusion: Looking Ahead

The technologies discussed above are just a few of the many promising areas of remote sensing research at NOAA. Over the next two decades, NOAA looks forward to working with private industry, academia, other government organizations, and the general public to promote and advance this exciting and rapidly changing field. Advances in remote sensing will enable quicker and more focused emergency response, more accurate map products, improved navigation, and better geospatial information and derived products for the general public and professionals in a wide variety of fields.
